Evaluation scares me

Until recently, I was never too excited about evaluation. I mean, come on. No kid grows up saying “I wanna become an evaluator!” (Those words would sound terrifying in a child’s voice.) Evaluation always seemed like an afterthought to me. A process that happens after your project, where you sit down, stare at spreadsheets, and attempt to justify what you did.

“Oh wow, 18 more people accessed our services than last time! We must be doing well!”

Sure, that sounds good, but what if those 18 people had to sit through my attempts at comedy? What if, when I used to work with youth, I scarred them for life with conflicting advice??? It’s important to look at metrics, but they’re easy to misinterpret and misrepresent. That’s why evaluation was never too hot on my radar. I’d seen too many people fudge numbers to have faith in them.

Over the years, my attempts to avoid evaluation have always ended in failure. From strange encounters with evaluators to working on frameworks to becoming an evaluator myself, I’ve learned to appreciate evaluation as a craft. It can be valuable work that’s so much more than the half-hearted, somewhat dishonest process I always assumed it was.

My perspective started to shift while I was an intern at Mass Culture. I started my co-op term doing the usual non-profit sorta stuff. You know, running events, helping with program development, breaking kneecaps (I’M JOKING), that sorta thing. At some point, I was asked if I wanted to take the reins as an evaluator for the Research in Residence: Arts’ Civic Impact project. The name was a mouthful, and I barely had any idea what evaluation was, but I still said yes. ’Cause why not? I’m always down to try new things.

And I’m glad I tried THIS THING.

The project

Research in Residence: Arts’ Civic Impact is an experimental, nationwide initiative in which universities (e.g. Dalhousie University) and arts organizations (e.g. Culture Days) collaborate to break new ground. Each organization hosts a graduate student whose goal is to create a qualitative impact framework that informs the organization’s practices. These frameworks are based on research into a topic such as diversity and inclusion or Indigenous cultural knowledge, chosen by the researcher at the outset of the program. The intention is for this research and its results to benefit the host community and the public in general. There are five of these research residencies happening concurrently, led by a total of six student researchers (one residency is run by a pair, while the other researchers work solo, albeit with the help of their academic advisors).

My role was to evaluate the project using an approach called developmental evaluation (DE). This approach differs from traditional evaluation in that it’s focused on innovation and adaptation rather than sheer efficiency. The most significant difference is that DE is not summative. Rather, it’s embedded into a project from the start.

Developmental evaluators are highly relational in their work, spending much of their effort on relationship-building and getting familiar with the various players and components of a project. While they use many of the same tools as their more traditional counterparts (e.g. surveys, interviews), their mindset is quite different. Evaluating a project while it’s still ongoing makes decision-making more robust. Potential issues can be addressed early, before they become major concerns. Directional pivots become more seamless.

Developmental evaluation is also more participatory. Since developmental evaluators make it a point to become a familiar face to those executing the activities being evaluated, they are able to gain a more in-depth understanding of what’s going on within an organization or project. The findings that the developmental evaluator uncovers are also meant to be shared with the community. Everyone gets a say in the evaluation.

You see the above sales pitch for developmental evaluation? It took me six months to learn how to articulate that. I had to go from learning what evaluation EVEN IS to having to learn a different approach altogether. I was not without support though. The folks at Mass Culture were so helpful in providing me with resources to learn from. They even enrolled me in an online class! Most importantly, they connected me with Jamie Gamble: independent consultant and rockstar evaluator. He’s quite respected in the field. (A realization I came to after months of calling him the “Terminator”.) Jamie acted as a coach/mentor figure throughout the process. He would point me in the right direction, talk me through his process, and give some all-around banger advice. I made it a point to hijack our meetings halfway through and turn them into mentorship sessions. He’s that good.

Anyways, an interesting aspect of this project was that I was doing research where the end result wasn’t an academic paper. Nor was it for the purpose of selling stuff, like the market research I currently do at my day job. I had a freedom from academic and capitalist confines that I had never experienced before. The world was my oyster!

I decided to conduct the evaluation in two phases lasting three months each. My main instrument was the qualitative interview. I’d create questions based on the project’s evaluation framework and interview people involved in all aspects of the work. I spoke to the grad students, their academic supervisors, their host organizations, and the project’s funders. I also collected some data by watching video diaries recorded by a couple of the people who facilitated this project. I took a grounded theory approach to analyzing this data, coding the transcripts and using inductive reasoning to draw conclusions.

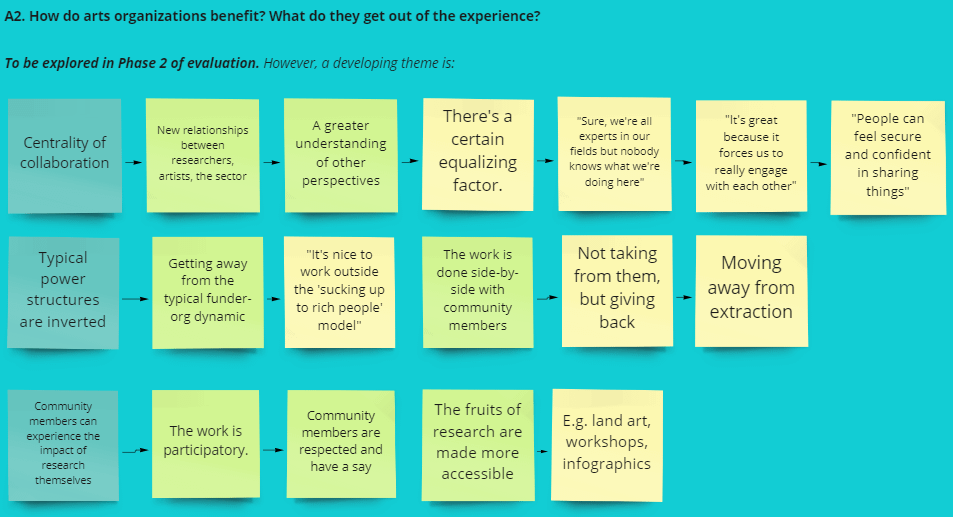

You may be wondering, “Isn’t this just standard qualitative research?” Yes, up to this point it was. The “developmental” part shows in how I presented this data. I condensed my pile of notes into bite-sized points and organized them according to prominent themes. These were then put onto a virtual whiteboard on Miro. Then, Jamie and I organized a sensemaking meeting with the people involved in the project. We’d gather them in a room, present the broad strokes, give people a chance to go over the data themselves, then spark a discussion about it. This format is similar to a focus group, but looser in structure, with plenty of space to discuss emergent themes. For example, the Phase One sensemaking session had some interesting discussion on power dynamics: “How does the structure of this project influence power structures? How are we as a group navigating power dynamics?” Fascinating stuff.

Phase One of the evaluation was conducted wholly online, while Phase Two culminated at a conference in Ottawa. It was fun connecting in person with the people I had interacted with so much online. There’s something to be said for being in a room with others as passionate as yourself. It’s also rewarding to physically witness the impact of your work.

There’s a power in gathering. In communing.

Evaluation is hella cool

This project really widened my perspective on evaluation. Finally, I’m able to understand how valuable evaluation (and research in general) can be in the arts sector.

Something I noticed when speaking with arts organizations is that researchers can contribute so much to their work. Having a person on board whose sole purpose is to seek knowledge means that there’s no shortage of fresh ideas or perspectives. It’s not that organizational staff CAN’T seek out this knowledge themselves. It’s just that they’re often overworked. You know, with having to wear five hats and fight for funding and all. Knowledge is a valuable resource.

Another pattern that stood out was that the more mature (in terms of lifespan) organizations seemed to have a greater understanding of evaluation, with some having internal evaluation practices of their own. I’d like to propose that a strong evaluation culture is a natural, integral part of an organization’s development. I’ve been involved with so many “homie in their garage” type organizations that would’ve benefited from streamlined evaluation practices.

Taking a developmental evaluation approach also fostered a greater sense of cohesiveness among the people working on the project. I think that involving people in the evaluation from the start gives them more of a say. People were able to express their opinions and see their concerns addressed. People also had a greater understanding of the evaluation process as a whole.

Evaluation in general is often misunderstood because people don’t really know how to go about it. I hope that changes in the future.

Bridging the gap

So far, I’ve talked about this project from my perspective as a researcher. But what about as an artist? As a human?

I’m used to being in scrappy, DIY, grassroots-type spaces. Communities where people don’t really have a sense of “the arts sector.” This project had me working sector-side with funders, directors, board members, and so on: people who would normally be on the other side of the table. People I’d usually only interact with while begging them for money haha. It’s still a flex among my friends if any of us get funding for our work.

Working side-by-side with funders made me realize that they aren’t as intimidating as they seem. Sure, there may be misunderstandings and differences in opinion, but at the end of the day, we all want what’s best for our greater arts community. I think part of artists’ apprehension comes from the fact that we rarely interact with people who are more sector-side. It would help bridge the gap if we had more chances to interact outside the context of seeking grants and such.

I saw a similar breaking of barriers among the academics and organizations I worked with. I think that because Research in Residence is such a novel, uncharted concept, it’s hard for anyone to say they know 100% how to go about things. This seemed to help academics and organizations relate to each other as colleagues rather than adversaries. People were less on guard and more willing to share.

That’s the goal, isn’t it?

All in all

I’m far from an expert. Heck, I still have lots to learn! Still, I realized how much fun this sort of work can be. I’ll definitely be carrying a more evaluation-minded perspective into the future.

It’s rare for me to get opportunities to combine my passions for art and research. The two realms work well in tandem: research for knowledge-gaining and art for communicating and expressing what we learn.

The fact that, through this project, we’re exploring non-traditional ways of sharing findings makes this research more participatory. I’m so stoked about how arts-based communication methods can better facilitate knowledge-sharing, especially with people like some of my loved ones, who don’t even know what “qualitative” means or are distrustful of research in general.

Research doesn’t have to be this ivory-tower, brainiac pursuit for people who know fancy words. It can be for everyone.

It SHOULD be for everyone.